In this post, I am going to talk about specific type of take-away game. Lucky for us, it deals with theories and examples from both "The game of Nim" (where two players take turns removing sticks from a pile, thank you Bayard) and the Sprague-Grundy theories (which allows us to find P-positions and N-positions if you are able to decipher the pattern, thanks you Sam).

Cookie Take-Away! (Yumm)

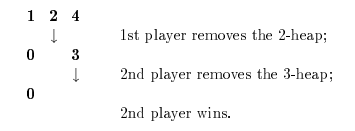

Cookie Take-Away is a combinatorial game where two players take turns removing a single cookie every day for a certain number of days. Some cookies may "go bad" while on the shelf and it becomes unsafe to eat, with each cookie having an expiration date written on it with frosting. A cookie is represented by a heap of n counters, where n is the number of days remaining before the cookie goes bad. So if a cookie has a heap size of 0, then that cookies time has expired. The winner is not the player who eats the most amount of cookies, but the winner is the player who has the joy of eating that last, delicious, mouth watering, cookie.A turn consists of removing a single, non-empty heap (cookie) and reducing all other heaps by 1 number (one day closer to expiration). Once again, the player who removes the last, non-expired cookie, is the winner. So if we were to represent each position in a non-descending order(which will be called the components of the position), a simple example would be [1 2 4] which signifies a three-heap set with heap sizes of 1,2, and 4. The game with [1 2 4] would be played out as follows:

Reduction Theorems

A game that starts with n-heaps must terminate in a finite number of (at most n) moves, one of the two players must have a winning strategy. If the game was just with the set [1], the first player would win no matter what. However, using the same initial set of [1 2 4], the second player would guarantee a win no matter what move the first player would do:

As a refresher, a P-position is a move that "ensures a route" to victory and an N-position is move that allows you to get back to a P-Position.

In the game of Cookie Take-Away, the set of [1] would be an N-type (wins for the next player) and if a set if not an N-position, it is called a P-type (the terminal position or a win for the "previous" player / win for the player who got it to that position), as seen in the set of [1 2 4]. So if one could determine, as the game progressed, if the set was an N or a P type, then that player would be guaranteed a win. If two arbitrary sets of G and H happen to be the same type, then we would say "G~H" or tantamount. So the set of [1 5 6 7] ~ [1 4 4 4] because the size of the heap does not matter if it is greater than the maximum possible moves in a game (the size of n), which is equivalent to the number of heaps at the start of the game. We can also see that the set [1 1 2] ~ [1 2] as only k heaps of size k will be relevant while developing a strategy, since the others will "tarnish" before they can be taken.

Depletion Theorem: If G were a set [ a1, a2, .... , an], then any component of size greater than n may be depleted down to size n without affecting the type of set.

Deletion Theorem: Let G be the set [a1,a2, ... , an]. If ak < k for some k, then the component of a1 may be deleted without affecting the type of set. A Surplus of G is the smallest non-integer s where k - ak < s for all 1 < k < n. So whenever s > 0, the set [ a1, a2, ... as] may all be deleted. This basically says to delete the s smallest components, where s is the surplus of the position.

So [1 2 3 3 6] ~ [ 1 2 3 3 5]. The set has surplus of 4 - a4 = 4 - 3 = 1, thus making it

tantamount to [ 2 3 3 5] or [ 2 3 3 4]

Proofs of these two theorems can be found at this journal entry: here

(I know this may not make much sense right now, but stay with me)

Using both depletion and deletion, one can convert each set into a reduced form of that same set and type. You may be asking "why would I want to do this?" - well hear me out. If we were to replace all the heaps of size > n by heaps that are effectively infinite (denoted by "*"), then we could have cookies that have an "infinite expiration date", which makes it much more clear as to why depletion and deletion is helpful. So when going through these steps, we want to DELETE as many times as possible, and then DEPLETE.

So the set [ 1 2 3 3 6] ~ [ 1 2 3 3 *]. Then you delete the smallest component,

which gives us [ 2 3 3 6] ~ [2 3 3 *]. Then you deplete the largest component,

which gives us [ 2 3 3 4]

So take a look at all of the possible reduced sets which have 4 or less components. You can see that if a set is in the N-position, then the winning move is the move that is circled. (Remember that a move is winning if that move/component leads to a P-position)

Hmmm. This may be an interesting pattern.

Here is a table that shows all sets from 1 2 .... n

If you haven't realized it yet, there are numerous interesting patterns that apply to each of these sets (with the exception of n = 1):

1) For n = 0 mod 3, the set is a P-type.

2) For n = 1 mod 3, the moves that would win you the game are 1, ... , 2/3 (n - 1)

3) For n = 2 mod 3, the moves that would win you the game are 2/3(n + 1), ... , n.

So....The table below gives a value for both a and n, where the winning moves are the ones shown. If it is blank, that means there is no possible winning move because that set is a P-type.

So....The table below gives a value for both a and n, where the winning moves are the ones shown. If it is blank, that means there is no possible winning move because that set is a P-type.

**Consistent pattern all the way up to n = 20, stopped as author saw sufficient data**

The line shown in the table divides it up into three segments. There is one containing the set [1], and two that are infinite, and are determined by whether or not n is > 2a - 2 or < 2a - 2.

However, you can still follow the simple rule (although it may not be that clear) of: if the largest move is congruent to 0 mod 3, 1 mod 3, or 2 mod 3, then you would either select indifferently, move with the smallest number possible, or move with the largest number possible. (Further Explained in greater detail in Proof link)

Sprague-Grundy Values!

Above is what the graph that you just saw in table 3, but converted into the Sprague-Grundy values. Here are some things that you should note down from this new board for figuring out values that go beyond this board:

- Above the drawn in diagonal line (n > 2a - 2), you see that the values stay consistent on the northeast-to-southwest diagonal.

- Also above the drawn in diagonal line, you will notice that the same repeating periodic sequence (Shout out to Lauren Q.) of 0,1,2,0,1,3,...,0,1,2,0,1,3.

- Below the diagonal drawn in line, the grundy values remain consistent as you go down a column.

What do these values tell you?

First, you need to know what strict positions are. Strict positions are sets that range from a1 to an, they have grundy values of 0,1,2, or 3, and all of its components are distinct, excluding those components of size n. (This is the case because of the Depletion theory explained earlier. The set [ 2 3 4 ] ~ [ 2 3 3 ].)

Winning Conjecture!

**variables with parentheses next to them should be depicted as subscripts

The winning conjecture says that: If G is a strict position of N-type, then the winning components of G are exactly those whose size lie in a specific "winning interval", which would be a(i), a(i+1), ..., a(j), as long as that list of natural numbers are uninterrupted. But this ONLY works for strict positions. For example, the set [ 1 2 2 3 6 6 ] does not have a winning conjecture because the winning moves are both the 2's and the 6's.

Final Thoughts?

And thats, that! Cookie Take-Away is a great fun game as it deals the factor of "expiration" to the already popular variation of the game Nim. If you're able to think ahead to where you could set yourself up to eat the last, healthy cookie, then you could be quite good at this game. But its interesting to think about if the same patterns on the grundy table follow all the way out to infinity, I mean who knows right? Anyways, Ive been writing this blog about cookies for a solid amount of hours now so Im kind of hungry. What are your favorite flavors of cookie? Let me know below!

Best of wishes,

Jon D.

Jon D.

http://faculty.uml.edu/jpropp/cookie1.pdf

http://faculty.uml.edu/jpropp/cookie2.pdf