Last

class, we briefly spoke about the capture time when introducing the original

cops and robbers game. Capture time determines how long it takes

or how many moves are needed in order for a cop to capture a robber. To find

the length of the game, we must find the number of rounds it takes for a cop to

capture a robber, with one round being a move by a robber followed by a move by

a cop.

The

first theorem that explains the maximum time it will take for a cop to catch a

robber is: If G is a cop-win graph of order n≥7, then the capt(G) ≤ n-4. This

states that it will take no more than n-4 moves for a cop to capture a robber.

In class

yesterday, we talked about the theorem that states that: G is a cop-win graph

if and only if G is dismantlable. A graph is dismantlable if it can continue to be broken down or dismantled into a graph that is a cop-win graph. In case anyone has forgotten, a 2-dismantlable

graph is a graph that has two separate corners or has fewer than 7 vertices. Having 2 separate corners means that each vertex that is a corner is dominated/followed by a separate vertex.

There exists another theorem that helps us

figure out the capture time for any cop-win and 2-dismantable graph. This

theorem is found in both of the readings that I used for this post.

Theorem: If G is 2-dismantable of order n, then capt(G) ≤

n/2.

Here is a brief version of the proof, which is done by induction.

Proof:

Let a and b be 2 separate corners in a

2-dismantable graph or order n≥7 followed by a’ and b’ (a' and b' are vertices which dominate the 2 corners a and b)

Form a new graph, H, by deleting vertices a and

b

H is 2-dismantable, so there is an optimal game

on H of length at most (n-2)/2. This length is (n-2)/2, because we are taking away two vertices (a and b) and applying this to our induction hypothesis.

Cop plays this game in H and for x∈{a,b}

whenever R is on x, then C plays as if he were on x’

After no greater than (n-2)/2 moves either the

Robber is caught, or R is on x and C is on x’, so the cop can capture the

robber in just one more move

(n-2)/2 +1= n/2 moves

So we find that the capture time of any

2-dismantable graph is ≤ n/2

This theorem does not apply to all cop-win

graphs though, only those that are 2-dismantable.

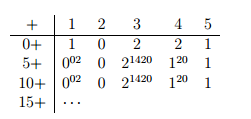

We can construct an infinite family of graphs of order n that will have a capture time no greater than n-4. We construct a graph so that all vertices are only neighboring other vertices that are 4, 3, or 1 values away from its value. We will use this graph to determine a strategy for capturing a robber in n-4 moves. This following strategy only works on this specific type of graph, but this graph is derived from our first theorem that the capture time of G is no greater than n-4 moves.

This strategy only works for a fixed integer n≥7

that have the following properties:

1)

The

graph H(n) is planar

2)

The

graph H(n) is cop-win and has a unique corner.

3)

Capt(H(n))=n-4

Each vertex in the graph H(n) that is

characterized by 5≤ x≤ n-4 has neighbors {x+4, x+3, x+1, x-1, x-3, x-4}. This

means that the cop and robber may move to vertices with index 1, 3, 4, more or

less than their current index. The following strategy can be used in these

specific types of graphs to find that the capture time will be n-4.

Strategy:

1)

In 0th

round, place cop on vertex 1

2)

After the

robber places himself on i>1, in the 0th round, move cop to j∈V(G(4))

with j∈{2, 3, 4, 5} so that i=j(mod4)

3)

This

step will be repeated until the cop captures the robber

a.

If the

robber moves from i to i+k, then the cop will move from j to j+k, where k=1, 3,

or 4

b.

If the

robber moves from i to i-1, then the cop moves from j to j+3.

c.

If the robber

moves from i to i-3, then the cop moves from j to j+1

d.

If the

robber moves from i to i-4, then the cop moves from j to j+4

I will show how this strategy works on a graph

H(11) tomorrow in class, but basically by using this strategy, the cop should

capture the robber in exactly n-4 moves. So in the case of H(11), it should

take 7 moves for the cop to capture the robber.

There

are many other graphs that are non-isomorphic to H(n) with capture of n-4.

Figuring out the maximum capture time of a specific cop-win game is in P- which

means it is solvable. These are just the basics for capture time in the

original cops and robbers game. There are a lot of more interesting things

about capture time in variants of cops and robbers- some which we talked about

yesterday in class. If anyone is interested in looking further into capture

time, there are many potential ideas that could be used for a final project!

References:

The Game of Cops and Robbers on Graphs by Bonato & Nowakowski, § 8.6

http://www.researchgate.net/publication/220185303_The_capture_time_of_a_graph

References:

The Game of Cops and Robbers on Graphs by Bonato & Nowakowski, § 8.6

http://www.researchgate.net/publication/220185303_The_capture_time_of_a_graph